
File Extraction

Concept

When enabled, file extraction saves to disk the files that each Stamus Network Probe observes on the wire, using Suricata signatures.

Files are extracted and stored locally on the Network Probe performing the extraction. The REST API on the manager, Stamus Central Server (SCS), lets you retrieve chosen files centrally.

As of U38, the currently supported protocols for file extraction are:

HTTP

SMTP

FTP

NFS

SMB

File Extraction Activation

To activate file extraction, go to Probe Management and open the Appliances menu.

Edit the desired Probe, or the desired Template, and go under the Settings tab.

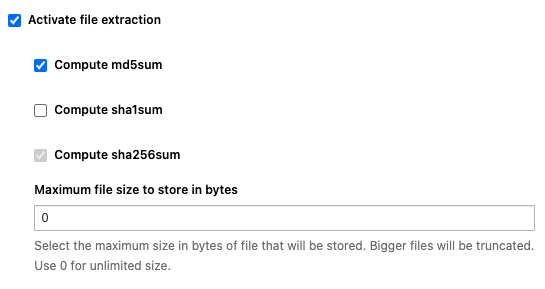

Check the checkbox “Activate file extraction” and a few more options will expand as illustrated by the below screenshot

The sha256 fingerprint is computed for every extracted file, and this option cannot be deactivated. The fingerprint is used to store each file only once, even if the same file is downloaded multiple times.

You can also choose to compute other common hashes such as md5 and/or sha1. This is especially useful if you integrate with third-party solutions that expect those hashes. This information, as well as the original filename, will be available in the fileinfo structure of the JSON metadata.

Note

File extraction has a performance impact on the Stamus Probe it is enabled on.

Important

Extracted files are de-duplicated. In other words, if the same file is extracted 5 times, it will only be saved to disk once on that particular Stamus Probe.

Finally, specify a size limit, in bytes, if you want to disregard large files such as ISO files, then click Apply changes for the changes to take effect.

Note

When 2 Network Probes, from 2 different capture locations, see the same file, this file will be extracted and stored on each of those 2 Network Probes. The deduplication of files is performed on a per probe basis.
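The de-duplication behavior described above can be pictured with a small Python sketch. This is only an illustration of storing files keyed by their sha256 fingerprint, not the actual implementation used on the probes. The function name store_once, the in-memory dictionaries standing in for each probe's disk, and the way the size limit is applied are all assumptions made for the example.

```python
import hashlib


def store_once(store, payload, size_limit=None):
    """Store payload under its sha256 digest unless it is too large or already known.

    `store` is a dict standing in for one probe's local file store (hypothetical).
    Returns (sha256_hex or None, True if the payload was newly stored).
    """
    # Assumption: files above the configured limit (in bytes) are disregarded.
    if size_limit is not None and len(payload) > size_limit:
        return None, False
    digest = hashlib.sha256(payload).hexdigest()
    if digest in store:
        # Same content seen before on this probe: nothing new is written.
        return digest, False
    store[digest] = payload
    return digest, True


# Each probe keeps its own store, so de-duplication is per probe.
probe_a, probe_b = {}, {}
for _ in range(5):
    store_once(probe_a, b"same file content")
store_once(probe_b, b"same file content")
print(len(probe_a), len(probe_b))  # prints: 1 1
```

The same content seen five times on one probe occupies one entry, while a second probe seeing that content keeps its own copy.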

How File Extraction Works

File extraction is driven by Suricata rules: only rules that use the filestore keyword perform file extraction.

For example, the following rule will extract and de-duplicate all executables observed on the wire that are downloaded via HTTP from the networks $INFRA_SERVERS and $DC_SERVERS (defined as configuration variables):

alert http [$INFRA_SERVERS,$DC_SERVERS] any -> $EXTERNAL_NET any (msg:"FILE Executable public download from critical infra detected"; flow:established,to_server; http.method; content:"GET"; file.mime_type; content:"executable"; filestore; sid:1; rev:1;)

As a best practice, always make rules as specific as possible, as illustrated by the previous example, in order to optimise performance and reduce false positives.

Note

We recommend using the new file.mime_type keyword instead of file.magic for better performance.
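Since only rules carrying the filestore keyword trigger extraction, it can be handy to check which rules in a ruleset do so. The following is a minimal Python sketch under simplifying assumptions: it treats everything between the first "(" and the last ")" as the option list and splits it on unescaped semicolons. It is not a full Suricata rule parser, and the helper names rule_keywords and uses_filestore are hypothetical.

```python
import re


def rule_keywords(rule):
    """Return the option keywords of a Suricata rule, in order of appearance."""
    start, end = rule.find("("), rule.rfind(")")
    if start == -1 or end <= start:
        return []
    body = rule[start + 1:end]
    # Split on ';' that is not escaped as '\;' inside a quoted value.
    options = re.split(r"(?<!\\);", body)
    return [opt.split(":", 1)[0].strip() for opt in options if opt.strip()]


def uses_filestore(rule):
    """True if the rule contains the filestore keyword."""
    return "filestore" in rule_keywords(rule)


example_rule = (
    'alert http [$INFRA_SERVERS,$DC_SERVERS] any -> $EXTERNAL_NET any '
    '(msg:"FILE Executable public download from critical infra detected"; '
    'flow:established,to_server; http.method; content:"GET"; '
    'file.mime_type; content:"executable"; filestore; sid:1; rev:1;)'
)
print(uses_filestore(example_rule))  # prints: True
```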

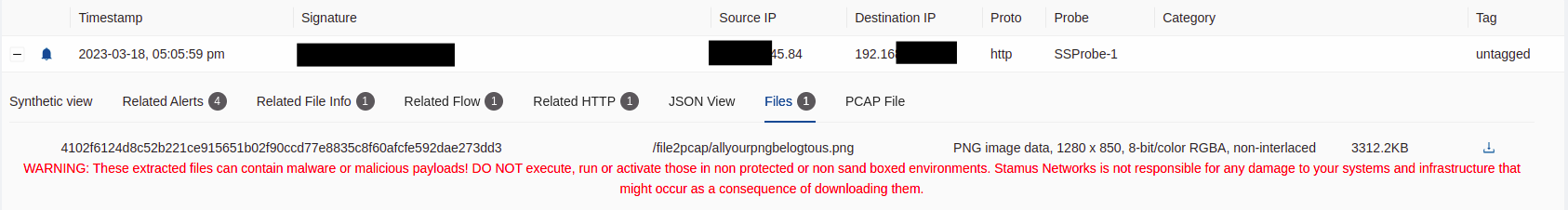

From the Hunting interface, the files will also be accessible directly from the alerts tab and can be downloaded as illustrated below

Important

Some of those files may be potentially dangerous, proceed with caution when downloading them!

Note

When a file has been extracted, the boolean field stored in the fileinfo structure (JSON View / Related Events) is set to true. Note that this only indicates that the file was stored at some point: it may have been purged by the time you look at the alert. While this is unlikely for recent alerts, it can be the case for the oldest ones. A cleaning process removes the oldest extracted files when the disk space limit is reached (10% of the /var/log/suricata partition).
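As an illustration of reading that flag, here is a minimal Python sketch that inspects one event's JSON. The sample event is made up for the example; only the fileinfo.stored and fileinfo.sha256 field names come from the text above, and the helper name extraction_info is hypothetical.

```python
import json


def extraction_info(event):
    """Return the sha256 of the extracted file, or None if the event has no stored file.

    A non-None result means the file was stored at some point; it may have been
    purged since, so the status endpoint should still be checked before download.
    """
    fileinfo = event.get("fileinfo", {})
    if fileinfo.get("stored") is True:
        return fileinfo.get("sha256")
    return None


# Hypothetical event, trimmed to the fields used here.
raw = '{"event_type": "fileinfo", "fileinfo": {"stored": true, "sha256": "7f87640e9b74a059a10fb37ad083eb0843045493589d5b6c1f971ad2c13ee127"}}'
print(extraction_info(json.loads(raw)))
```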

Hint

If you need to keep files for longer periods, set up a third-party server and regularly export the files to it, for example using the REST API. The partition on which the files are stored on the Network Probes can only be extended on virtual machines.

Getting Files from the REST-API

Retrieving a file from the REST API is a two-step process. First, we instruct the manager, SCS, to retrieve the file from the Network Probe. Once the file has been retrieved onto SCS, we can download it.

To query this endpoint, we will need 2 components:

The hash (sha256) of the file to retrieve

The probe name (string) on which the file is stored

First, we can check the status of the file using the following cURL command:

curl -X GET -k "https://<SCS_ADDRESS>/rest/rules/filestore/<HASH>/status/?host=<PROBE_NAME>" -H "Authorization: Token <TOKEN>" -H 'Content-Type: application/json'

If the file is still present (i.e. not deleted), we will receive the following response:

{"status":"available"}

Next, we instruct SCS to fetch the file from the Network Probe. To do so, we use the retrieve endpoint:

curl -X GET -k "https://<SCS_ADDRESS>/rest/rules/filestore/<HASH>/retrieve/?host=<PROBE_NAME>" -H 'Authorization: Token <TOKEN>' -H 'Content-Type: application/json'

The file should then be copied onto SCS, and you will get this result:

{"retrieve":"done"}

Finally, to download the file, use the download endpoint:

curl -X GET -O -J -k "https://<SCS_ADDRESS>/rest/rules/filestore/<HASH>/download/?host=<PROBE_NAME>" -H "Authorization: Token <TOKEN>" -H 'Content-Type: application/octet-stream'
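The three calls above can be chained in code. The sketch below shows one possible way to do it in Python, checking each response before moving on. To keep it independent of any HTTP library, the HTTP layer is passed in as two callables, get_json and get_bytes, which are assumptions of this example (as are the function names); in practice they would wrap authenticated GET requests that send the Authorization: Token header.

```python
from urllib.parse import quote


def filestore_url(scs_address, file_hash, action, probe_name):
    """Build the status, retrieve or download URL for one extracted file."""
    return (
        f"https://{scs_address}/rest/rules/filestore/"
        f"{quote(file_hash, safe='')}/{action}/?host={quote(probe_name, safe='')}"
    )


def fetch_extracted_file(get_json, get_bytes, scs_address, file_hash, probe_name):
    """Run status -> retrieve -> download and return the file content as bytes.

    get_json(url) must return the decoded JSON body; get_bytes(url) the raw body.
    """
    status = get_json(filestore_url(scs_address, file_hash, "status", probe_name))
    if status.get("status") != "available":
        raise RuntimeError(f"File not available on {probe_name}: {status}")
    result = get_json(filestore_url(scs_address, file_hash, "retrieve", probe_name))
    if result.get("retrieve") != "done":
        raise RuntimeError(f"SCS could not retrieve the file: {result}")
    return get_bytes(filestore_url(scs_address, file_hash, "download", probe_name))
```

Stopping at the first unexpected answer avoids attempting to download a file that has already been purged from the probe.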

Hint

To get the metadata required to build the complete REST API call, the alerts REST API endpoint can be used.

This can be achieved by filtering on unique key value pairs like the signature id and the flow id, for example.

https://<SCS_ADDRESS>/rest/rules/es/alerts_tail/?qfilter=alert.signature_id:2029743 AND flow_id:2077942411095108

The query above filters all events of event_type alert on signature_id 2029743 and flow_id 2077942411095108. Once that information is at hand, the same technique can be applied to the fileinfo events:

https://<SCS_ADDRESS>/rest/rules/es/events_from_flow_id/?qfilter=flow_id:2077942411095108 AND fileinfo.stored:true

This will provide the file hashes that are required so the actual file is retrieved.
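When building these query URLs programmatically, the qfilter value contains spaces and colons, so it is safer to percent-encode it. A minimal Python sketch, assuming the two endpoints shown above; the function names are hypothetical:

```python
from urllib.parse import quote


def alerts_query_url(scs_address, signature_id, flow_id):
    """URL listing the alerts matching a signature id and a flow id."""
    qfilter = f"alert.signature_id:{signature_id} AND flow_id:{flow_id}"
    return f"https://{scs_address}/rest/rules/es/alerts_tail/?qfilter={quote(qfilter, safe='')}"


def stored_fileinfo_query_url(scs_address, flow_id):
    """URL listing the fileinfo events of a flow whose file was stored."""
    qfilter = f"flow_id:{flow_id} AND fileinfo.stored:true"
    return f"https://{scs_address}/rest/rules/es/events_from_flow_id/?qfilter={quote(qfilter, safe='')}"


print(alerts_query_url("<SCS_ADDRESS>", 2029743, 2077942411095108))
print(stored_fileinfo_query_url("<SCS_ADDRESS>", 2077942411095108))
```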

Note

There can be several fileinfo events for a single network flow. When multiple files are transferred within one flow, Suricata generates one fileinfo event per file, so a query on a flow id may return more than one file hash.
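Because one flow can yield several fileinfo events, a script should be prepared to handle a list of files rather than a single one. The sketch below collects the unique (probe, sha256) pairs from a list of events. It assumes each event carries the probe name in a top-level host field, as the script further down does, and that the events have already been decoded from JSON; the function name files_to_retrieve is hypothetical.

```python
def files_to_retrieve(events):
    """Return unique (probe_name, sha256) pairs for stored files, in first-seen order."""
    seen = set()
    pairs = []
    for event in events:
        fileinfo = event.get("fileinfo", {})
        if event.get("event_type") != "fileinfo" or not fileinfo.get("stored"):
            continue
        key = (event.get("host"), fileinfo.get("sha256"))
        # Skip incomplete events and files already listed for this probe.
        if None in key or key in seen:
            continue
        seen.add(key)
        pairs.append(key)
    return pairs
```

Each returned pair provides the two components needed by the filestore endpoints: the probe name and the file hash.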

An example of a complete Python script implementation:

    import subprocess
    import sys

    import requests
    import urllib3


    def parse_args():
        """Return (url, token, file_hash) from the command line, or print usage and exit."""
        if len(sys.argv) < 4:
            print(
                f"How to run the script: {sys.argv[0]} <hostname/ip of SCS> <token> <sha256 checksum of the file>"
            )
            print(
                f"\nExample: python3 {sys.argv[0]} 192.168.0.12 7408a4b978abdc03ee39e1fea419512e5734f51e 7f87640e9b74a059a10fb37ad083eb0843045493589d5b6c1f971ad2c13ee127"
            )
            sys.exit(1)
        return "https://" + sys.argv[1] + "/rest", sys.argv[2], sys.argv[3]


    def check_host_is_up(hostname, waittime=1000):
        """Optional helper (not called below): ping the host once, raise if it does not answer.

        The unit of -W depends on the platform (seconds on Linux, milliseconds on macOS).
        """
        result = subprocess.run(
            ["ping", "-c", "1", "-W", str(waittime), hostname],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
        if result.returncode != 0:
            raise Exception("Error. Host %s is not up..." % hostname)
        return True


    def check_url_is_reachable(url, verify=True):
        """Return a human-readable reachability message for the given URL."""
        try:
            get = requests.get(url, verify=verify)
            if get.status_code == 200:
                return f"{url}: is reachable"
            return f"{url}: is Not reachable, status_code: {get.status_code}"
        except requests.exceptions.RequestException as e:
            raise Exception(f"{url}: is Not reachable \nErr: {e}")


    def check_request(response, show_body=True):
        """Print the outcome of a request and raise on HTTP errors."""
        if response.status_code in (200, 201):
            print("Request is successful!")
            if show_body:
                print("Response:")
                print(response.text)
        else:
            print(f"Request failed with status code: {response.status_code}")
            print(response.text)
            response.raise_for_status()


    class RestCall:
        # Change this to False if you use https with a self signed certificate
        verify = True

        def __init__(self, token, url):
            self.token = token
            self.url = url
            self.headers = {"Authorization": f"Token {self.token}"}
            # Only relevant when verify is False: silence the certificate warnings.
            urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

        def _get(self, rest_point, show_body=True):
            """Send an authenticated GET request and check the result."""
            response = requests.get(
                self.url + rest_point, headers=self.headers, verify=self.verify
            )
            check_request(response, show_body=show_body)
            return response

        def get_filehashes_exist(self, file_hash):
            """Return the name of a probe that stored a file with the given sha256."""
            print("INFO: Get the file hashes from event type fileinfo")
            rest_point = f"/rules/es/events_tail/?qfilter=event_type:fileinfo AND fileinfo.stored:true AND fileinfo.sha256:{file_hash}"
            results = self._get(rest_point).json().get("results", [])
            if results:
                return results[0]["host"]
            raise Exception(
                "ERROR: There's no file, in any fileinfo event type, matching the given sha256. Exiting ..."
            )

        def get_extracted_file_status(self, file_hash, probe_name):
            print("INFO: Get the status of the extracted file")
            rest_point = f"/rules/filestore/{file_hash}/status/?host={probe_name}"
            return self._get(rest_point).json()

        def retrieve_extracted_file(self, file_hash, probe_name):
            print("ACTION: Retrieve the extracted file")
            rest_point = f"/rules/filestore/{file_hash}/retrieve/?host={probe_name}"
            return self._get(rest_point).json()

        def download_extracted_file(self, file_hash, probe_name):
            print("ACTION: Download extracted file")
            rest_point = f"/rules/filestore/{file_hash}/download/?host={probe_name}"
            # The body is the raw file, so it is neither printed nor decoded as text.
            response = self._get(rest_point, show_body=False)
            extracted_file = file_hash + ".data"
            # Write bytes: extracted files are often binary (executables, archives, ...).
            with open(extracted_file, "wb") as file:
                file.write(response.content)
            return extracted_file


    if __name__ == "__main__":
        url, token, file_hash = parse_args()
        SCS_Rest = RestCall(token, url)
        print(check_url_is_reachable(url, verify=SCS_Rest.verify))
        probe_name = SCS_Rest.get_filehashes_exist(file_hash)
        SCS_Rest.get_extracted_file_status(file_hash, probe_name)
        SCS_Rest.retrieve_extracted_file(file_hash, probe_name)
        SCS_Rest.download_extracted_file(file_hash, probe_name)