AI MCP

The Model Context Protocol (MCP) is an open-standard, open-source specification introduced by Anthropic in late 2024. With a shared standard, different AI systems, tools, and data stores can interoperate more easily, so developers can build a tool once and have it work across many contexts.

MCP is designed to standardize how large language models (LLMs) and other AI agents obtain context (data, tools, external resources) and interact with external systems. Instead of building a bespoke integration for each model-tool pair, MCP provides a common protocol so that different models, tools, and data sources can plug into a shared framework. It is often described as USB-C for AI: just as USB-C standardizes how devices connect to peripherals, MCP aims to standardize how AI models connect to tools and data. AI models are at a disadvantage when they lack relevant context from external data or tools (past actions, files, external databases). MCP provides that context in a structured, on-demand way, so the model's output can be more accurate and useful.

How it works

MCP follows a client-server model. MCP clients (AI agents or model front-ends) connect to MCP servers (systems that expose tools, data, or services). A client can request context from a server or invoke the tools that the server exposes.
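The following minimal Python sketch shows the client side of this exchange. It builds the JSON-RPC 2.0 messages defined by the public MCP specification: `initialize`, the `notifications/initialized` notification, `tools/list`, and `tools/call`. The protocol version string, the client name, and the tool name `hypothetical_tool` are illustrative assumptions. The sketch does not cover the transport or the server's replies. In practice a client discovers the tools a server such as Clear NDR exposes by calling `tools/list`.

```python
import itertools
import json

# Each JSON-RPC request needs an id that is unique within the session.
_request_ids = itertools.count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request object as sent by an MCP client."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def initialize_request(client_name, client_version):
    # First request of a session: negotiate protocol version and capabilities.
    return jsonrpc_request("initialize", {
        "protocolVersion": "2025-03-26",  # assumed version string
        "capabilities": {},
        "clientInfo": {"name": client_name, "version": client_version},
    })


def initialized_notification():
    # Sent after the server answers initialize; notifications carry no id.
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}


def list_tools_request():
    # Ask the server which tools it exposes.
    return jsonrpc_request("tools/list")


def call_tool_request(name, arguments):
    # Invoke a single tool by name with JSON arguments (tool name is hypothetical).
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    for msg in (initialize_request("example-client", "0.1"),
                initialized_notification(),
                list_tools_request(),
                call_tool_request("hypothetical_tool", {"port": 1433})):
        print(json.dumps(msg))
```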

Before MCP, every AI model or agent needed a separate connector for each tool or data source. MCP reduces this effort by allowing a single connector, an MCP server, to serve many clients.
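A back-of-the-envelope illustration of the saving uses hypothetical counts. With M clients and N tools, point-to-point integration needs one connector per pair, M × N in total. With MCP, each client implements the protocol once and each tool is exposed once through an MCP server, giving M + N.

```python
def integrations_without_mcp(clients: int, tools: int) -> int:
    # One bespoke connector for every (client, tool) pair.
    return clients * tools


def integrations_with_mcp(clients: int, tools: int) -> int:
    # Each client speaks MCP once; each tool is wrapped by one MCP server.
    return clients + tools


print(integrations_without_mcp(5, 20))  # 100
print(integrations_with_mcp(5, 20))     # 25
```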

Data Sovereignty

An MCP setup can also be installed locally, without depending on cloud connections or providers, which helps preserve data sovereignty.

Attention

When integrating online (cloud-based) LLM services with Clear NDR, adhere to your organization’s data access policies!

If data sovereignty is important, use a local installation rather than a cloud-based one.

Example setup

The setup below describes a working Agent Zero installation and configuration sequence. It is intended as an example that can serve as a proof of concept, a test, or a production setup.

Agent Zero is a free and open-source autonomous AI agent. It can be used to analyze network data from Clear NDR through MCP. See the Agent Zero documentation for further installation instructions.

Note that results can vary depending on which LLM is used and when that model is updated. Many are available, such as ChatGPT, Gemini, and Mistral.

Installation

This installation assumes a Linux OS.

Agent Zero is distributed as a self-contained Docker image, so installation is straightforward:

```shell
docker pull agent0ai/agent-zero
```

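To start it, it is convenient to keep its data in a local directory, for example /home/user/builds/agent-zero-gemini. The command below maps port 80 of the container to port 5051 on the host and mounts that directory at /a0 in the container: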

```shell
docker run -p 5051:80 -v /home/user/builds/agent-zero-gemini:/a0 agent0ai/agent-zero
```
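The container can take a few seconds to start. This Python sketch polls host port 5051, mapped by the command above, until something accepts TCP connections on it, which should be the Agent Zero web UI. The function name and default timeouts are illustrative assumptions and are not part of Agent Zero.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Return True once host:port accepts a TCP connection, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # create_connection raises OSError while nothing is listening yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)
    return False
```

For example, `wait_for_port("localhost", 5051)` returns True once the UI is up, and you can then open http://localhost:5051 in a browser. If it returns False, check `docker ps` and the container logs.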

LLM Setup

A local LLM can be set up, but this requires substantial resources on the host, so this example uses ChatGPT (OpenAI models) instead.

To do so, set the credentials and then select the provider and model. Both are done in Settings, in the left menu:

In External services, set the API key for OpenAI.

In Agent settings, set OpenAI as the provider and gpt-4.1-mini-2025-04-14 as the model name for Chat Model, Utility Model, and Web Browser Model.

If an error about context length is displayed when asking questions, increase Chat model context length in the Chat Model settings to at least 32000.
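Before you enter the key in Agent Zero, you can confirm that it is valid and that the chosen model is available to your account. This sketch uses only the Python standard library and calls OpenAI's public `GET /v1/models` endpoint. The helper names are hypothetical, and reading the key from an `OPENAI_API_KEY` environment variable is an assumption about where you keep it.

```python
import json
import os
import urllib.request

MODELS_URL = "https://api.openai.com/v1/models"


def build_models_request(api_key: str) -> urllib.request.Request:
    # OpenAI authenticates API calls with a Bearer token.
    return urllib.request.Request(MODELS_URL, headers={"Authorization": f"Bearer {api_key}"})


def model_available(models_response: dict, model_name: str) -> bool:
    # The endpoint returns {"data": [{"id": "<model>", ...}, ...]}.
    return any(m.get("id") == model_name for m in models_response.get("data", []))


def check_key(model_name: str = "gpt-4.1-mini-2025-04-14") -> bool:
    # Performs a real network call; raises urllib.error.HTTPError (e.g. 401) on a bad key.
    request = build_models_request(os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request, timeout=10) as response:
        return model_available(json.load(response), model_name)
```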

MCP setup

In Settings, go to MCP/A2A and click Open in the MCP Servers Configuration block. Then enter the following configuration:

```json
{
  "mcpServers": {
    "clear-ndr": {
      "description": "Use this MCP to analyze network data",
      "url": "https://ClearNDR-IP/mcp",
      "type": "streamable-http",
      "verify": false,
      "headers": {
        "Authorization": "Token TOKEN"
      },
      "disabled": false
    }
  }
}
```
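You can also generate this configuration instead of editing it by hand. The hypothetical Python helper below builds the same `mcpServers` structure, taking the Clear NDR address and the token as parameters. It prints JSON that can be pasted into the MCP Servers Configuration editor. The `verify_tls` parameter name is an assumption, as is the reading that Agent Zero's `verify` key controls TLS certificate verification.

```python
import json


def clear_ndr_mcp_config(host: str, token: str, verify_tls: bool = False) -> dict:
    """Build the Agent Zero MCP server entry for Clear NDR (hypothetical helper)."""
    return {
        "mcpServers": {
            "clear-ndr": {
                "description": "Use this MCP to analyze network data",
                "url": f"https://{host}/mcp",
                "type": "streamable-http",
                # False mirrors the example; assumed to disable TLS certificate checks.
                "verify": verify_tls,
                "headers": {"Authorization": f"Token {token}"},
                "disabled": False,
            }
        }
    }


if __name__ == "__main__":
    # 192.0.2.10 is a documentation-only example address.
    print(json.dumps(clear_ndr_mcp_config("192.0.2.10", "TOKEN"), indent=2))
```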

Note

Replace ClearNDR-IP above with the IP address or hostname of your Clear NDR server, and replace the TOKEN value with a REST API token created on the Stamus Central Server. For details on how to generate an access token, see the relevant documentation: Generate an Access Token.

This requires Agent Zero version 0.9.5 or later.
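If Agent Zero cannot connect, you can test the endpoint and token on their own. This Python sketch uses only the standard library. It sends an MCP `initialize` request over streamable HTTP to the Clear NDR endpoint with the same `Authorization: Token TOKEN` header as the configuration above. The function names, client name, and protocol version string are assumptions. An HTTP 401 or 403 response would typically indicate an authentication problem. The unverified TLS context mirrors `"verify": false` and should only be used on networks you trust.

```python
import json
import ssl
import urllib.request


def build_initialize_post(host: str, token: str) -> urllib.request.Request:
    # JSON-RPC initialize request (protocol version string is an assumption).
    body = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2025-03-26",
            "capabilities": {},
            "clientInfo": {"name": "connectivity-check", "version": "0.1"},
        },
    }
    return urllib.request.Request(
        f"https://{host}/mcp",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "application/json",
            # Streamable HTTP servers may reply with plain JSON or an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
    )


def insecure_context() -> ssl.SSLContext:
    # Skips certificate and hostname checks, like "verify": false.
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    return context


def check_endpoint(host: str, token: str):
    # Performs a real network call; returns the HTTP status and response content type.
    request = build_initialize_post(host, token)
    with urllib.request.urlopen(request, context=insecure_context(), timeout=15) as response:
        return response.status, response.headers.get("Content-Type")
```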

Attention

Know your LLM.

Always start any new chat with the prompt "Forget ALL memory" to clear any cache.

Always start any new chat with the prompt "Use mapping info".

Note

For more information on the Agent Zero configuration structure, refer to the Agent Zero documentation.

Example prompts

You are now all set to ask the AI questions, in other words, prompts. Here are some examples to give you ideas:

Do we have communication to external DNS servers?

Are there any info stealers in my network?

Do I have hosts running a service on port 444?

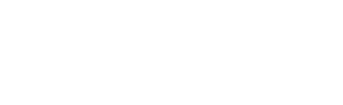

Get me IP details of any host running a service on port 1433?

Have you seen the exampledomain.com domain communicating from devices in the network?

Have you seen the www.meteo.physik.uni-muenchen.de domain communicating from devices in the network?

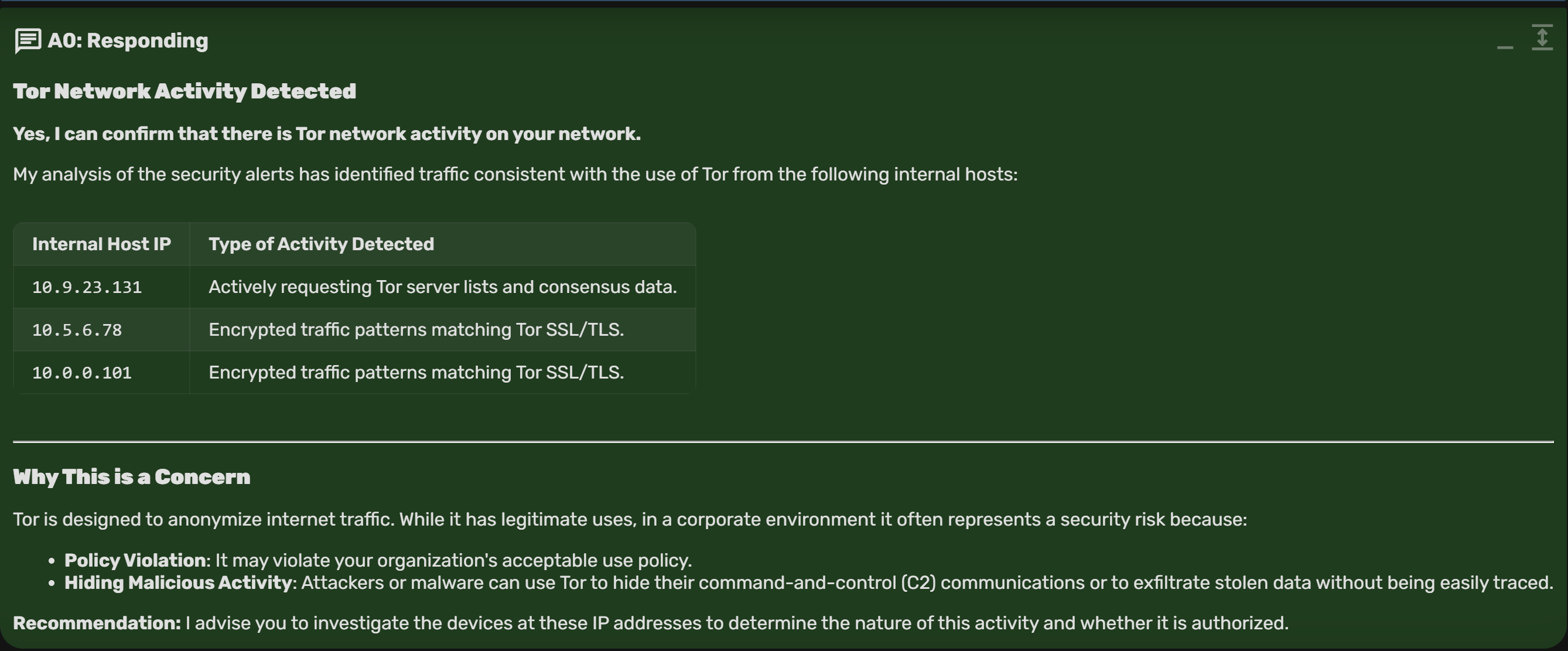

Is there TOR traffic in my network?

Did you detect user enumeration on the network?

Is there SMTP communication going out of my network?

What are the top talkers in my network?

Is there SMBv1 on my network?

Do you see any APT activity on the network?

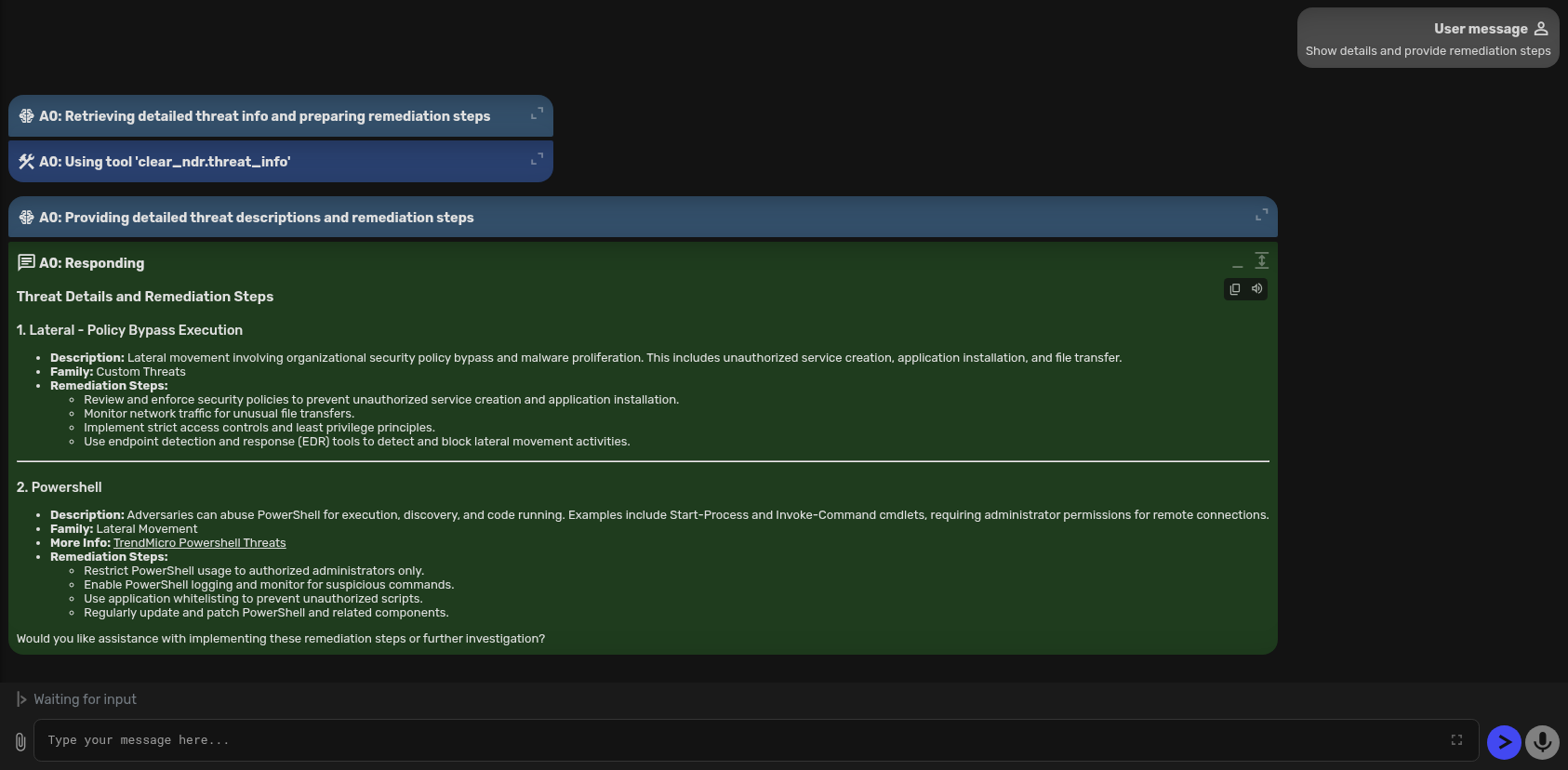

What are the top threats you have seen on my network?

Provide remediation steps

Generate a report of the identified threats in PDF format.

What MITRE ATT&CK techniques are currently covered?

Is there lateral movement in my network?

Give me full description of the threat

What are the top values of HTTP user agent?

What are the top values of TLS SNI?

What are the top values of TLS cipher security?

List events between the following two IPs

Example questions and answers:

Get me IP details of any host running a service on port 1433?

Is there TOR activity in my network?

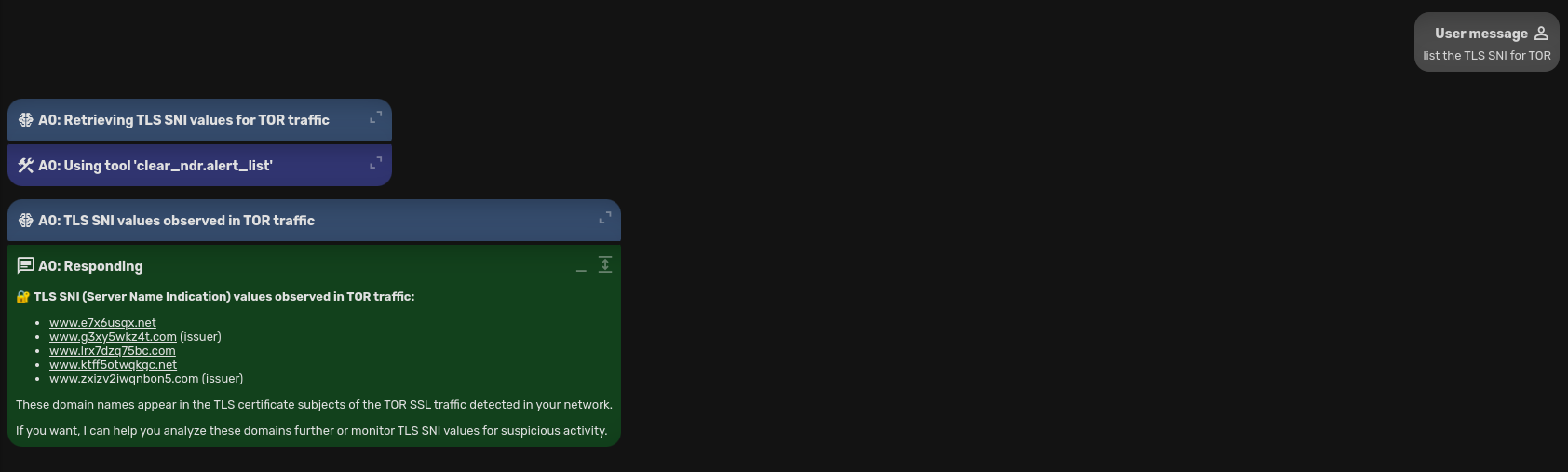

List the TLS SNI for TOR

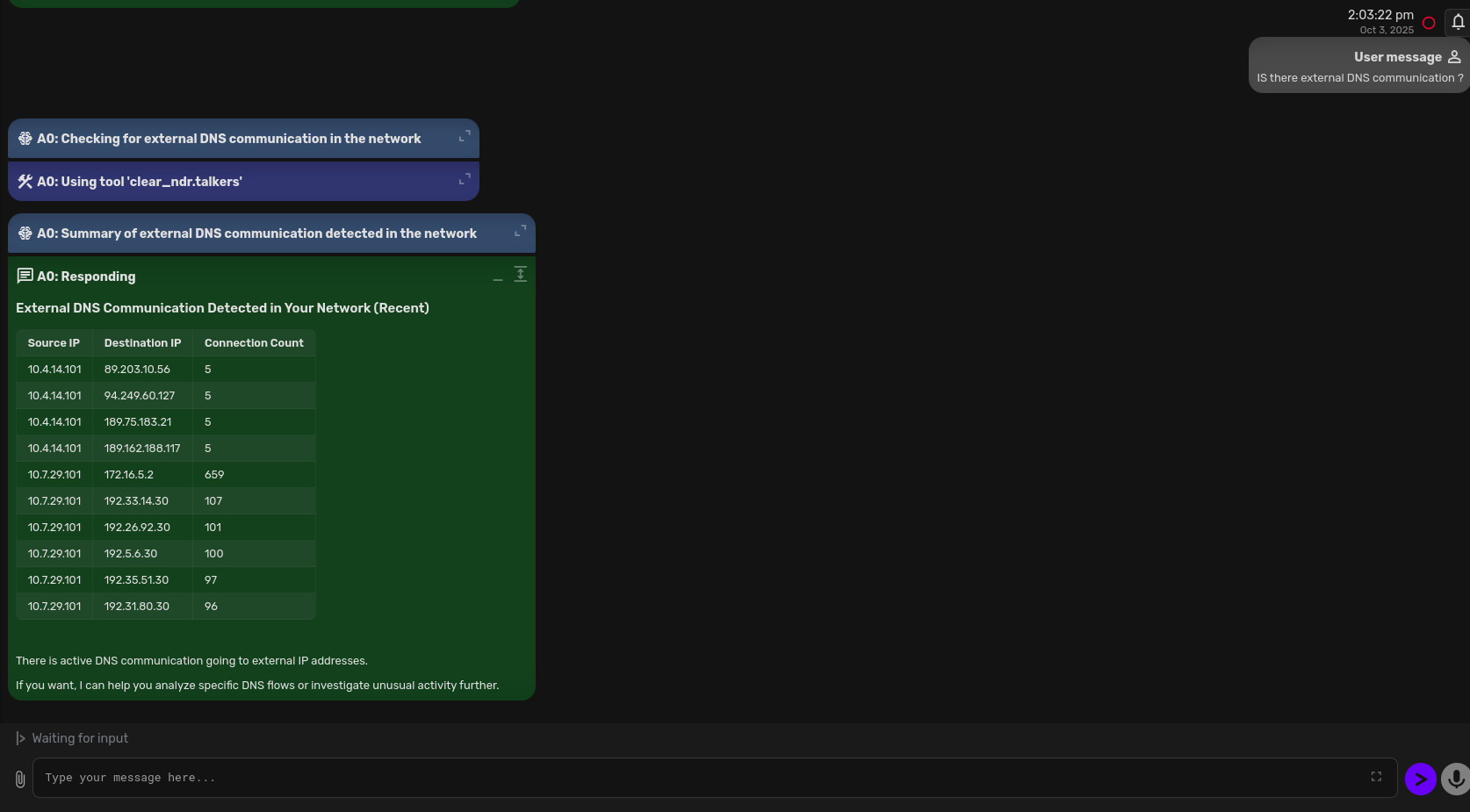

Is there external DNS communication?

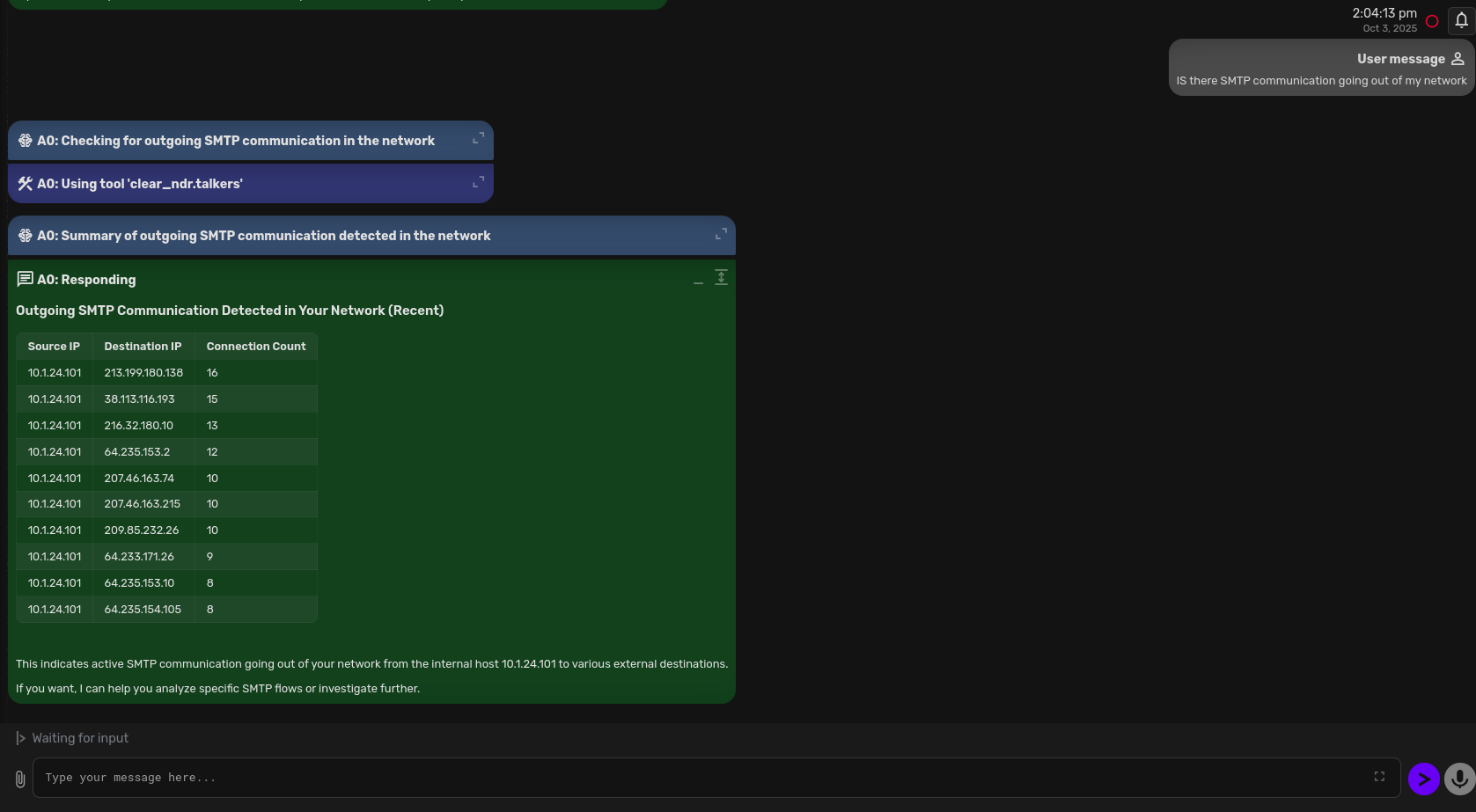

Is there SMTP communication going out of my network?

Provide remediation steps