Warning: You are viewing an older version of this documentation. Most recent is here: 42.0.0

Approaching Threat Hunting¶

This section describes the policy actions that one can create using the Stamus Hunting interface and what they are designed for.

Building your Cyber Defense with SCS¶

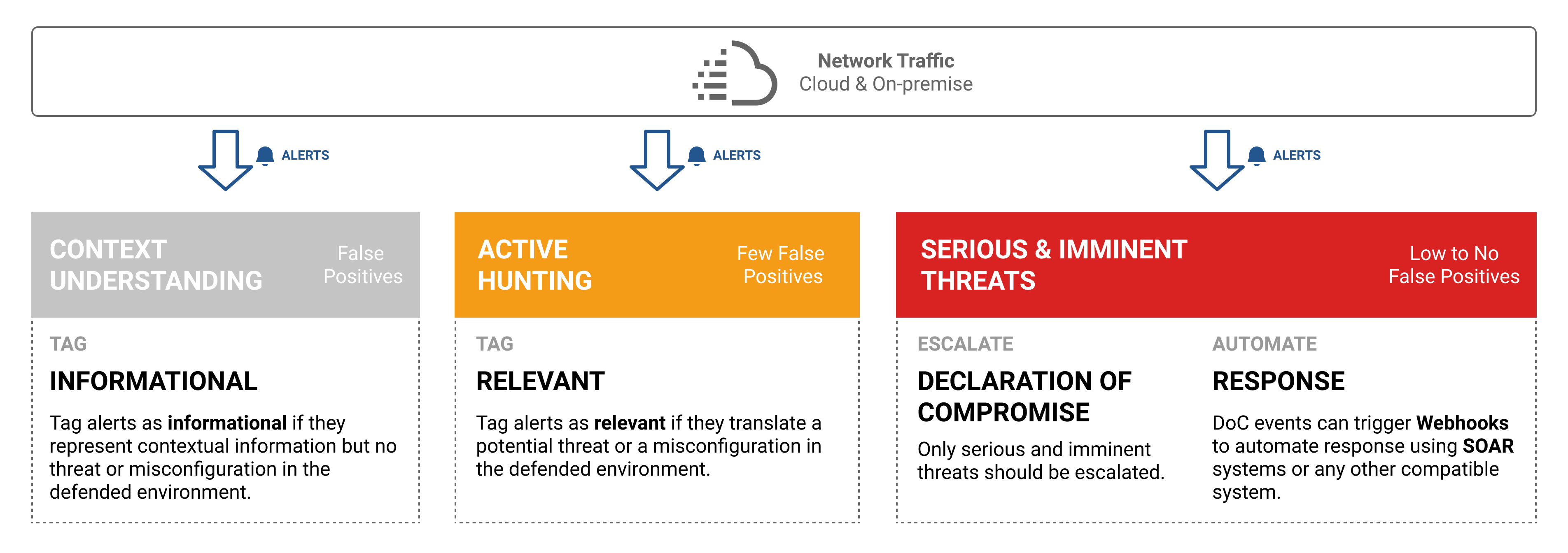

In order to decide which policy fits your environment best, it is important to understand how Stamus Central Server envisions cyber defense. This diagram summarises the most important concepts.

To illustrate those concepts, three examples are detailed below.

Context Understanding¶

Let’s assume an environment in which the ETPRO rule category “POLICY” has been activated, including signatures such as the MS-EFSR rules, which detect activity of the Microsoft Encrypting File System Remote Protocol.

Such rules have two potential drawbacks. First, depending on the signature itself, they can be resource intensive. Second, they can produce many alerts that do not necessarily describe an active threat, because some rules look for indicators so broad that they may trigger under many circumstances.

Stamus Networks normally deactivates poorly written signatures that tend to be resource intensive. If such a signature does need to be activated, make sure it is only activated temporarily.

If a signature produces many alerts that are mostly, if not always, false positives, it is best to keep the signature activated and classify the associated alerts as informational. This classification simply records that “this thing” was observed in the defended network at that point in time.

In a later investigation, those alerts may then prove useful in understanding a potential attack, but by themselves they do not provide any actionable insight to cyber security analysts.

Active Hunting¶

In any given environment, the download of executable files with wget is intriguing by nature: it is not something a user or an administrator would normally do, although an administrator might do it under special circumstances.

Under this postulate, the behavior needs further investigation to decide whether it is legitimate or illegitimate in the defended context. The postulate also assumes that, while this rule provides very targeted results, it may still produce a few false positives, which analysts can recognize as such.

As a consequence, it is better to triage this behavior as relevant, because the security team needs to be aware of it for further qualification and contextualisation.

In that case, creating a policy action to classify those events as relevant is the most appropriate choice.

Serious & Imminent Threats¶

Let’s assume the very same example as in Active Hunting: the download of executable files with wget. As previously detailed, this behavior needs further qualification before it can be deemed legitimate or harmful in a given context.

Now let’s assume that the defended organization prohibits such behavior through its global cyber security policy. In that case, there is no question of whether it is acceptable or not: it is forbidden by company rules.

When there is such a clear “no”, escalating the events to Threat Radar is the most appropriate choice, because this console is first and foremost designed for SOC operations: it is the entry point for dealing with serious and imminent threats.

Another example is a Suricata signature that detects a pattern so specific that the likelihood of false positives is extremely low. This is the case, for example, of ETPro rule SID 2032875, which detects the download of SharpNoPSExec, an offensive tool used for lateral movement.

Note

When an STR Event is created, it can trigger a webhook action to post a message to a chat application such as Slack or Mattermost, create a ticket in a ticketing system, start a playbook on a SOAR system, etc.

Policies Overview¶

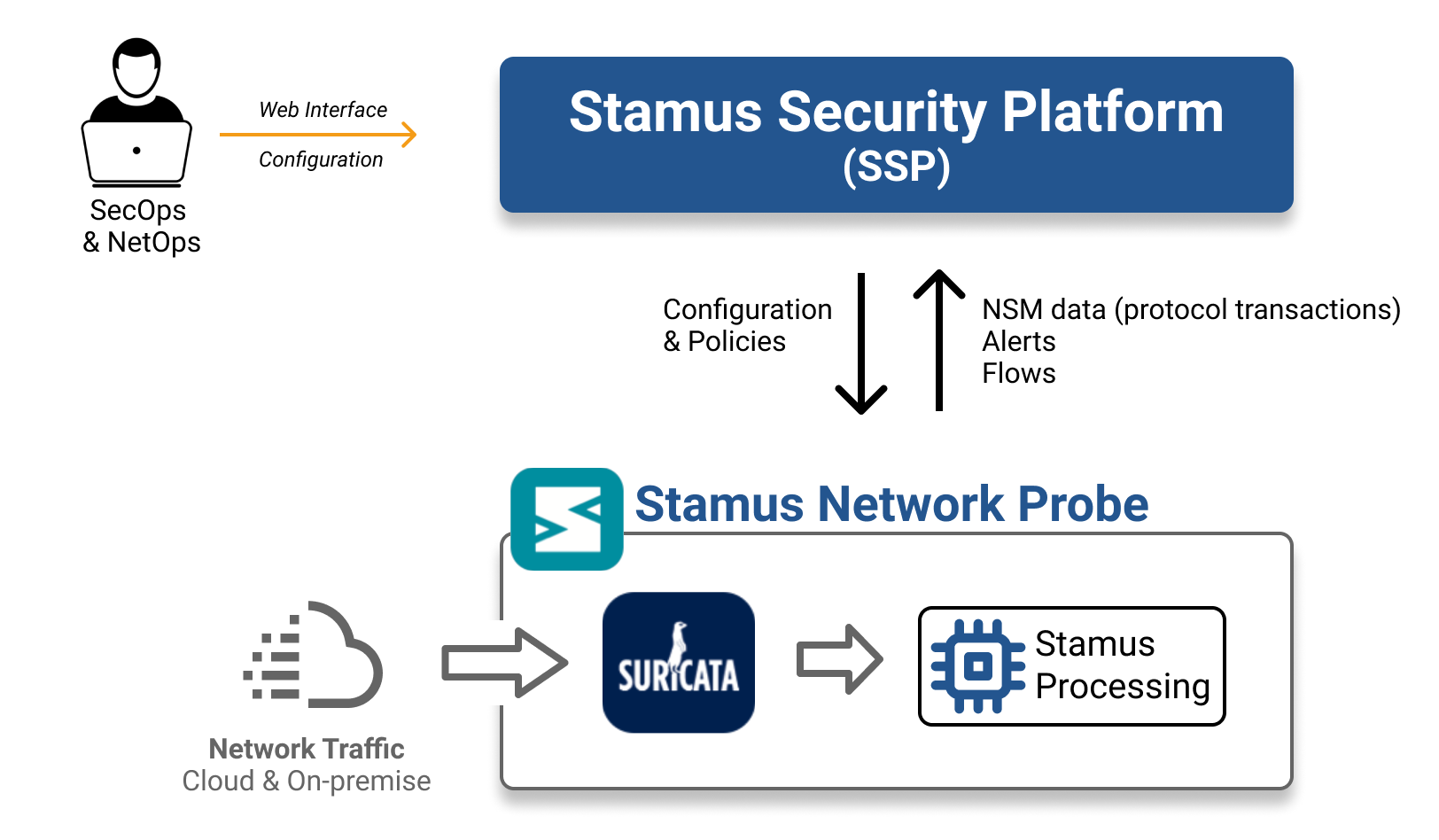

At a high level, Policies are centrally managed (created, edited, removed) from the Stamus Central Server (SCS). SCS deploys them to the Stamus Network Probes, where they are executed by the Stamus Processing component.

In a nutshell, Policies are designed to help triage alerts automatically by establishing logical rules. There are 5 types of actions that can be performed with policies:

Suppression, to remove an alert

Thresholding, to keep an alert under certain conditions

Tagging, to enrich the alert with a tag

Escalating, to escalate an alert to Stamus Threat Radar

Emailing, to send an email

Almost all policy actions can use any alert field, including metadata; Threshold and Email are the exceptions, as they only work with signatures. Policies are executed in sequence, in the order in which they are listed, from first to last.
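The ordering rules described on this page can be sketched in Python. This is a minimal model, not Stamus Processing's implementation: the alert shape, the `Policy` class and the action names (`suppress`, `tag`, `tag_keep`) are hypothetical, and Tag & Keep is treated as final, as listed in the summary table of this page.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# An alert is modeled as a flat dict of field name -> value (hypothetical shape).
Alert = dict


@dataclass
class Policy:
    name: str
    matches: Callable[[Alert], bool]  # the policy's filter
    action: str                       # "suppress", "tag" or "tag_keep" in this sketch
    tag: Optional[str] = None         # "informational" or "relevant" for tag actions


def evaluate(alert: Alert, policies: list) -> Optional[Alert]:
    """Apply policies from first to last; return the resulting alert, or None if suppressed."""
    result = dict(alert)
    for policy in policies:
        if not policy.matches(alert):
            continue
        if policy.action == "suppress":
            # Terminative: the alert is dropped and later policies never see it.
            return None
        if policy.action == "tag":
            # Not terminative: a later matching tag policy overwrites this value.
            result["tag"] = policy.tag
        elif policy.action == "tag_keep":
            # Treated as final: no later policy can alter the tag.
            result["tag"] = policy.tag
            return result
    return result


# Hypothetical policies, listed in evaluation order.
policies = [
    Policy("drop STUN noise",
           lambda a: a["signature"].startswith("ET INFO Session Traversal Utilities for NAT"),
           "suppress"),
    Policy("wget downloads are relevant",
           lambda a: "wget" in a["signature"].lower(), "tag", "relevant"),
    Policy("lab subnet is informational",
           lambda a: a["src_ip"].startswith("10.99."), "tag", "informational"),
]

if __name__ == "__main__":
    alert = {"signature": "Hypothetical wget executable download", "src_ip": "10.99.0.5"}
    # Both tag policies match; the later one wins because Tag is not terminative,
    # so the resulting tag is "informational".
    print(evaluate(alert, policies))
```

Reordering the list changes the outcome, which is why the position of a new policy matters.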

Use Cases¶

At a high level, policies help address 3 situations:

Noise reduction (networks are noisy by definition) is achieved with actions of type Suppress or Threshold.

Events classification (hunting) is achieved with actions of type Tag or Tag & Keep.

Identification of serious and imminent threats (declaration of compromise) is achieved with actions of type Create a STR Event or Email.

Creating a Policy¶

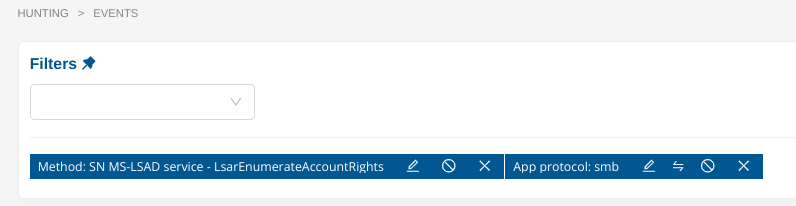

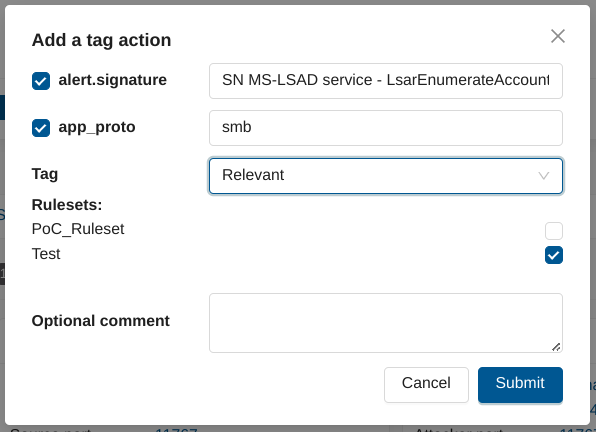

Once you have the desired filters in place that match the relevant events, click the Policy Actions button in the top right corner and select the kind of policy you want to create.

A pop-up will open:

* Adjust the filters area as you see fit.
* Select the ruleset to which this new policy should be deployed.
* Add any additional comment (for change tracking, for example).
* Submit the new policy.

Once the policy has been created, you are automatically redirected to the Hunting / Policies page, where you can optionally reorder the policy to best fit your environment. A newly created policy is placed in the last position.

Now, all that is needed is to actually deploy this policy by pushing the ruleset to the relevant Stamus Network Probes.

To do that, simply click the “Update / Push ruleset” button in the upper right hand corner of the page.

Exporting/Importing a Policy and Filtersets¶

Once you have created your policies and custom filtersets, you can easily export them from the Administration app.

From there, go to the Rulesets page. Under Action, click the Import/Export Policies/Filtersets link.

On the next page, you will be able to import and/or export your policies and filtersets.

Note

The file export/import format should be a tgz archive.
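Before importing an archive, it can help to check that it really is a tgz file. The sketch below relies only on Python's standard `tarfile` module; the internal layout of an export is not documented here, so it simply lists member names, and the file name passed on the command line is up to you.

```python
import sys
import tarfile


def list_archive(path: str) -> list:
    """Return the member names of a gzip-compressed tar (tgz) archive.

    tarfile raises tarfile.ReadError if the file is not a valid tgz archive.
    """
    with tarfile.open(path, mode="r:gz") as archive:
        return archive.getnames()


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python list_export.py <exported-archive.tgz>
    for name in list_archive(sys.argv[1]):
        print(name)
```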

Policy Actions¶

Suppress¶

Alert suppression is a mechanism that discards an alert without any manual intervention. The suppression is performed on the Stamus Network Probes, so the concerned alert never reaches SCS: it is simply disregarded and is not stored.

The Suppress action is terminative, which means that the alert is not evaluated by subsequent policies.

Alert suppression is mostly used to remove noisy alerts.

Note

There are 2 forms of alert suppression in SCS. The suppression described above takes place when a Suppress policy is created from the Stamus Hunting interface. On the probe, Stamus Processing is the component that suppresses the alert: Suricata still emits the alert, but Stamus Processing filters it out.

Suppression can also be performed by Suricata itself, through Stamus Probe Management. This is less flexible than a policy action because alert metadata is not accessible: only the signature SID and the source or destination IP address can be used. This advanced approach, reserved for edge cases, is recommended in heavily loaded environments to save system resources (CPU, memory) or to suppress a Threat Radar false positive.
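For reference, Suricata expresses this kind of suppression in its `threshold.config` file, where a `suppress` line takes a generator ID, a signature ID and, optionally, a `track by_src` or `track by_dst` address. The helper below builds such a line; whether Probe Management exposes or stores suppressions in exactly this form is an assumption, and the SID in the example is a placeholder.

```python
import ipaddress
from typing import Optional


def suppress_line(sid: int, track: Optional[str] = None,
                  ip: Optional[str] = None, gid: int = 1) -> str:
    """Build a Suricata threshold.config 'suppress' line.

    Only the signature ID and, optionally, a source or destination address
    can be expressed here: alert metadata is not available at this level.
    """
    line = f"suppress gen_id {gid}, sig_id {sid}"
    if track is None and ip is None:
        return line  # suppress the signature for all addresses
    if track not in ("by_src", "by_dst"):
        raise ValueError("track must be 'by_src' or 'by_dst'")
    if ip is None:
        raise ValueError("an ip (address or CIDR) is required when track is set")
    ipaddress.ip_network(ip, strict=False)  # raises ValueError if malformed
    return f"{line}, track {track}, ip {ip}"


if __name__ == "__main__":
    # 1000001 is a placeholder SID, not a real signature.
    print(suppress_line(1000001, "by_src", "10.0.0.5"))
    # -> suppress gen_id 1, sig_id 1000001, track by_src, ip 10.0.0.5
```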

Threshold¶

Alert thresholding is a mechanism that limits the number of alerts received (count) over a defined period of time (seconds). This computation is performed by Suricata.

For example, a filter such as “alert.signature: ET INFO Session Traversal Utilities for NAT (STUN Binding Request)” matches alerts for this specific signature, which tends to be quite verbose.

If a policy is created from that filter with the following attributes:

Count is 5

Seconds is 60

Track by Source

Then SCS will receive at most 5 alerts per minute for each source IP address. If more alerts are produced in that time span, only the first 5 emitted alerts are received; subsequent alerts are ignored.
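This example behaves like Suricata's `limit` threshold type (`type limit, track by_src, count 5, seconds 60` in `threshold.config` syntax). The class below is a simplified Python model of that behavior, not Suricata's code: each source IP gets its own window, opened by its first alert, and only the first `count` alerts within `seconds` pass.

```python
class LimitThreshold:
    """Simplified model of a 'limit' threshold tracked by source IP.

    Each source gets its own window, opened by its first alert; within a
    window of `seconds`, only the first `count` alerts are let through.
    """

    def __init__(self, count: int, seconds: float):
        self.count = count
        self.seconds = seconds
        self._windows = {}  # src_ip -> [window_start, alerts_let_through]

    def allow(self, src_ip: str, ts: float) -> bool:
        window = self._windows.get(src_ip)
        if window is None or ts - window[0] >= self.seconds:
            # First alert from this source, or its previous window expired.
            self._windows[src_ip] = [ts, 1]
            return True
        if window[1] < self.count:
            window[1] += 1
            return True
        return False  # limit reached: this alert is not forwarded to SCS


if __name__ == "__main__":
    limiter = LimitThreshold(count=5, seconds=60)
    # Eight alerts from the same source within the same minute.
    print([limiter.allow("10.0.0.1", t) for t in range(8)])
    # -> [True, True, True, True, True, False, False, False]
```

Because tracking is per source, a second noisy host gets its own budget of 5 alerts rather than sharing the first host's.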

Thresholding is generally used to lower the noise of alerts by ignoring their repetition in short periods of time.

Finally, it is important to note that thresholding only works with alert signatures, so metadata cannot be used to create threshold conditions.

As a rule of thumb, refrain from using wildcards on signature messages in threshold policies. This does not mean you cannot or should not do it, but be careful when combining wildcards with thresholding.
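To see why wildcards call for care, consider how far a pattern reaches. This sketch uses Python's `fnmatch` shell-style matching as a stand-in for the Hunting interface's wildcard syntax (an assumption: the actual matching rules may differ), applied to an illustrative list of signature messages. A broad pattern silently brings unrelated signatures under the same threshold.

```python
from fnmatch import fnmatchcase

# Illustrative signature messages only.
SIGNATURES = [
    "ET INFO Session Traversal Utilities for NAT (STUN Binding Request)",
    "ET INFO Session Traversal Utilities for NAT (STUN Binding Response)",
    "ET INFO Executable Download from dotted-quad Host",
    "ET INFO Observed DNS Query to .cloud TLD",
]


def covered_by(pattern: str, signatures: list) -> list:
    """Return the signature messages a shell-style wildcard pattern matches."""
    return [s for s in signatures if fnmatchcase(s, pattern)]


if __name__ == "__main__":
    # Narrow pattern: only the two STUN signatures.
    print(len(covered_by("ET INFO Session Traversal Utilities for NAT (STUN*", SIGNATURES)))  # -> 2
    # Broad pattern: every ET INFO signature, including the executable download one.
    print(len(covered_by("ET INFO *", SIGNATURES)))  # -> 4
```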

Tag¶

Alert tagging is a mechanism that adds an extra tag to an alert based on a defined filter. The tag is applied by the Stamus Network Probes, so it is already set when the alert reaches SCS.

Tag values can be either informational or relevant. The former declares that the matching alerts are informative, providing contextual information, but do not represent any suspicious or unusual behaviour. The latter declares the matching alerts as interesting from a cybersecurity point of view: they may not represent a serious threat, but at least something that needs to be kept an eye on or that needs further investigation.

Tags are the direct translation of hunting work. They are meant to classify alerts in your context, easing the hunting operations.

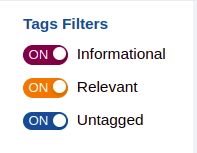

The Stamus Hunting interface allows filtering alerts by tag, so it is possible to display only alerts tagged as relevant, only alerts tagged as informational, only untagged alerts, or any combination of the three.

Finally, note that tagging is not a terminative action, which means that the alert continues to be evaluated by subsequent policies. If a subsequent policy sets a tag, it replaces the previous one. To enforce a tag, see “Tag & Keep”.
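The tag-based filtering offered by the Hunting interface amounts to a set-membership test. In this hypothetical sketch, each alert is a dict whose optional `tag` key holds `relevant` or `informational`, and `None` stands for untagged alerts.

```python
from typing import Iterable, Optional, Set


def filter_by_tag(alerts: Iterable[dict], wanted: Set[Optional[str]]) -> list:
    """Keep alerts whose 'tag' is in `wanted`; put None in `wanted` to keep untagged alerts."""
    return [a for a in alerts if a.get("tag") in wanted]


if __name__ == "__main__":
    alerts = [
        {"id": 1, "tag": "relevant"},
        {"id": 2, "tag": "informational"},
        {"id": 3},  # no tag policy matched this alert
    ]
    # Relevant or untagged alerts only.
    print([a["id"] for a in filter_by_tag(alerts, {"relevant", None})])  # -> [1, 3]
```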

Tag & Keep¶

Tag & Keep tags alerts in the same way as regular tagging. The difference is that Tag & Keep enforces the tag: no subsequent matching policy can alter it.

This is typically used when there are hundreds of policy actions or more and it is difficult to keep track of every single one.

Email¶

The Email policy action is available in the Stamus Hunting interface once a proper SMTP configuration has been set.

As with thresholding, the Email action cannot use alert metadata and only works with signature names.

To avoid being flooded with emails, SCS sends by default at most 5 emails per signature per day. This setting can be adjusted under Probe Management.

Whenever possible, prefer webhooks over Email by using Custom STR Events.
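The default email cap can be modeled as a counter keyed by signature and day. This is a minimal sketch under two assumptions: a “day” is a calendar day, and the limit applies per signature name. How SCS actually counts is not described here.

```python
from collections import Counter
from datetime import datetime


class DailyEmailLimiter:
    """Allow at most `per_day` emails per signature per calendar day."""

    def __init__(self, per_day: int = 5):
        self.per_day = per_day
        self._sent = Counter()  # (signature, date) -> emails already sent

    def should_send(self, signature: str, when: datetime) -> bool:
        key = (signature, when.date())
        if self._sent[key] >= self.per_day:
            return False  # cap reached for this signature today
        self._sent[key] += 1
        return True


if __name__ == "__main__":
    limiter = DailyEmailLimiter()
    morning = datetime(2021, 6, 1, 9, 0)
    print([limiter.should_send("Hypothetical signature", morning) for _ in range(7)])
    # -> [True, True, True, True, True, False, False]
```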

Create a STR Event¶

The policy action Create a STR Event should be used when characterizing a serious and imminent threat (i.e. with a very low false positive rate).

Such an STR Event, or Threat, will appear in the Stamus Threat Radar console for immediate action.

Once an STR Event is escalated, a webhook action can be triggered to post a message to a chat application, open a support ticket, trigger a SOAR response, and so on.
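SCS sends the webhook itself once it is configured, so no code is needed in production. Still, to check that a chat endpoint works, a request like the one below can help. The URL and the `threat` and `asset` event fields are hypothetical placeholders, not the SCS webhook payload; the `{"text": ...}` body is the format accepted by Slack and Mattermost incoming webhooks.

```python
import json
import urllib.request

# Hypothetical incoming-webhook URL: replace with your chat's webhook.
WEBHOOK_URL = "https://chat.example.com/hooks/REPLACE_ME"


def build_chat_request(event: dict, url: str = WEBHOOK_URL) -> urllib.request.Request:
    """Build (without sending) a POST request announcing an STR Event in a chat channel."""
    text = f"[STR] {event['threat']} on {event['asset']}"
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request({"threat": "SharpNoPSExec download", "asset": "10.0.0.5"})
    # urllib.request.urlopen(req) would send it; omitted so the sketch has no side effects.
    print(req.get_method(), req.full_url, req.data)
```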

Summary¶

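This table summarizes the available policy actions and what they are used for. “Final” indicates whether the action is terminative (the alert is no longer evaluated by subsequent policies); “Alert’s metadata” indicates whether the action can use alert metadata.

| Policy Action | Purpose | Final | Alert’s metadata |
|---|---|---|---|
| Suppress | Noise reduction | Yes | Yes |
| Threshold | Noise reduction | Yes | No |
| Tag | Classification | No | Yes |
| Tag & Keep | Classification | Yes | Yes |
| Email | Escalation | No | No |
| Create STR Event | Escalation | No | Yes |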

Best Practices¶

Loose filters¶

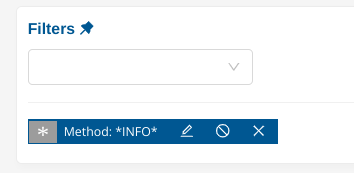

A filter that is not specific enough, such as the one in this example, will match a lot of alerts. Such a filter should not be used for a policy, regardless of the policy action chosen.

As a rule of thumb, a good filter candidate for a policy action is often a combination of at least 2 criteria.
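The difference between a loose and a specific filter can be sketched as a logical AND over criteria. The alert fields and values below are invented for illustration; adding a second criterion shrinks the match set.

```python
from typing import Iterable


def matches(alert: dict, criteria: dict) -> bool:
    """An alert matches only if it satisfies every criterion (logical AND)."""
    return all(alert.get(key) == value for key, value in criteria.items())


def count_matches(alerts: Iterable[dict], criteria: dict) -> int:
    return sum(1 for alert in alerts if matches(alert, criteria))


# Invented alert sample; field names and values are illustrative only.
ALERTS = [
    {"category": "Hunting", "app_proto": "http", "user_agent": "Wget/1.21"},
    {"category": "Hunting", "app_proto": "http", "user_agent": "Mozilla/5.0"},
    {"category": "Hunting", "app_proto": "dns"},
    {"category": "Policy", "app_proto": "http", "user_agent": "Wget/1.21"},
]

LOOSE = {"app_proto": "http"}                                # one criterion
SPECIFIC = {"app_proto": "http", "user_agent": "Wget/1.21"}  # two criteria

if __name__ == "__main__":
    print(count_matches(ALERTS, LOOSE), count_matches(ALERTS, SPECIFIC))  # -> 3 2
```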

However, using this kind of filter to explore the data is completely fine, as long as it remains a manual investigation (SCS is designed for such use cases).

Reverse the logic of a threat to implement a filter¶

When writing filters associated with a policy action, it is best to try to reverse the logic of a threat or an attack, that is, to start from the traces it leaves on the network. With this approach, it becomes possible to write filters that are specific and narrow enough to identify the desired threat.

Filters work best with organizational context. For example, it is possible to use Network Definitions to narrow down the scope of a filter to specific areas of the defended network, such as a business unit or zone (e.g. accounting, DMZ, domain controllers).
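Narrowing a filter with a network definition boils down to an address-in-subnet test. In this sketch, the definition names and CIDR blocks are hypothetical stand-ins for Network Definitions configured in SCS; Python's standard `ipaddress` module does the containment check.

```python
import ipaddress

# Hypothetical network definitions: name -> CIDR blocks.
NETWORK_DEFINITIONS = {
    "accounting": ["10.10.0.0/24"],
    "dmz": ["192.168.100.0/24"],
    "domain controllers": ["10.0.0.10/32", "10.0.0.11/32"],
}


def in_definition(ip: str, name: str, definitions: dict = NETWORK_DEFINITIONS) -> bool:
    """True if `ip` belongs to any CIDR block of the named network definition."""
    address = ipaddress.ip_address(ip)
    return any(address in ipaddress.ip_network(cidr) for cidr in definitions[name])


if __name__ == "__main__":
    alert = {"dest_ip": "10.0.0.11", "signature": "Hypothetical signature"}
    # Keep the alert only if it targets a domain controller.
    print(in_definition(alert["dest_ip"], "domain controllers"))  # -> True
```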

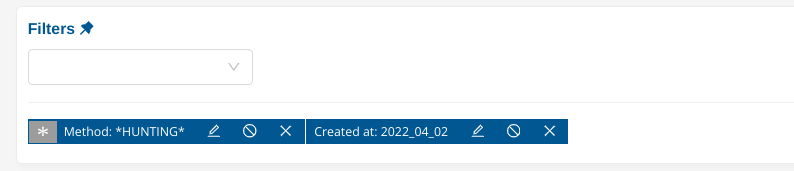

This example illustrates a specific filter: it lists all alerts that were created in 2021 and whose signature belongs to the Hunting category.

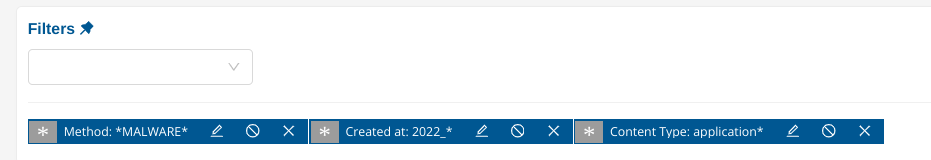

This other example lists all alerts created in 2021 by a signature containing the term “exploit”, excluding all alerts with the HTTP User-Agent Mozilla/5.0.
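The two example filters can be written as predicates to make their logic explicit. This is a sketch over a hypothetical alert dict: the field names (`timestamp`, `category`, `signature`, `http_user_agent`) are assumptions, the year is read from the first four characters of an ISO 8601 timestamp, the “exploit” match is case-insensitive, and the User-Agent exclusion is an exact match. The Hunting interface may behave differently on the last two points.

```python
def _year(alert: dict) -> int:
    # ISO 8601 timestamps start with the year, e.g. "2021-03-01T12:00:00.000000+0000".
    return int(alert["timestamp"][:4])


def hunting_2021(alert: dict) -> bool:
    """Alerts created in 2021 whose signature belongs to the Hunting category."""
    return _year(alert) == 2021 and alert.get("category") == "Hunting"


def exploit_2021_without_mozilla(alert: dict) -> bool:
    """Alerts created in 2021 by a signature mentioning 'exploit',
    excluding those with the HTTP User-Agent Mozilla/5.0."""
    return (
        _year(alert) == 2021
        and "exploit" in alert["signature"].lower()
        and alert.get("http_user_agent") != "Mozilla/5.0"
    )


if __name__ == "__main__":
    sample = {
        "timestamp": "2021-03-01T12:00:00.000000+0000",
        "signature": "Hypothetical EXPLOIT signature",
        "category": "Hunting",
        "http_user_agent": "curl/7.68.0",
    }
    print(hunting_2021(sample), exploit_2021_without_mozilla(sample))  # -> True True
```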

Note

SCS provides out-of-the-box filters, called Filter Sets, which are produced with this methodology. The intent is to offer filters that produce a limited number of false positives, so that you can start hunting right away (depending, of course, on the defended context). These filters can be adjusted to your context and used as a foundation for policy actions.